22nd December 2021

Neuron mechanisms supporting speech understanding

Use of a complex language is a key feature of the human species. Sequences of sounds presented within a structure called grammar can construct images of past situations and even situations which have never existed. In terms of neuron activity, how does this work and what is the role of grammatical rules?

AUDITORY AND VISUAL INFORMATION SPACES

The auditory input to the human brain is a relatively narrow information channel compared to visual. For example, the optic nerve carrying information from the eyes to the brain has about a million axons, while the cochlear nerve carrying auditory information from the ears only has around 30 thousand axons. The monomodal cortical areas processing visual inputs are much more extensive than the monomodal areas processing auditory inputs. Hence speech allows information from a smaller and simpler information space to access and manipulate information in a much larger information space.

In the visual cortical areas, the externally derived information is raw retinal inputs. Pyramidal neurons define receptive fields corresponding with very complex combinations of these raw retinal inputs that have often been present at the same time in the past. The complexity of the receptive fields increases moving from the visual primary area V1 through lower areas to higher areas. Individual receptive fields do not correspond with cognitive categories or behaviours; rather, each receptive field acquires a wide range of recommendation strengths in favour of different behaviours, each recommendation having its own independently determined weight. The behaviours with the largest total recommendation weights across all currently detected receptive fields are determined and implemented by the basal ganglia.
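
The selection rule in this paragraph can be sketched in a few lines of Python. This is only an illustrative toy, not a model of the basal ganglia: the receptive field names (`rf_a` and so on), the behaviour labels and all the weights are invented for the example.

```python
from collections import defaultdict

# Hypothetical recommendation weights: receptive field -> {behaviour: weight}.
# Every name and number here is an illustrative assumption.
RECOMMENDATIONS = {
    "rf_a": {"continue_work": 0.4, "warn_owner": 0.1},
    "rf_b": {"warn_owner": 0.5, "brace_for_cat": 0.2},
    "rf_c": {"continue_work": 0.3, "brace_for_cat": 0.1},
}


def select_behaviour(active_fields, recommendations):
    """Sum each behaviour's weights over the active fields; return the largest."""
    totals = defaultdict(float)
    for field in active_fields:
        for behaviour, weight in recommendations.get(field, {}).items():
            totals[behaviour] += weight
    if not totals:
        return None, {}
    winner = max(totals, key=totals.get)
    return winner, dict(totals)


# Only rf_a and rf_b are currently detected.
winner, totals = select_behaviour(["rf_a", "rf_b"], RECOMMENDATIONS)
print(winner, totals)
```

No single receptive field stands for a behaviour in this sketch. The outcome depends only on the summed weights, so detecting `rf_c` as well would change which behaviour wins.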

Because of the much larger visual information space, the most extensive ranges of behavioural recommendations are available by activation of visual receptive fields. Receptive fields in different ranges of information complexity, defined by different cortical areas, are most effective for discriminating between different types of objects or situations. For example, one area might be most effective for discriminating between different categories of visual objects. This area could be labelled ≈visual objects, where the ≈ symbol means discriminates effectively between. As mentioned earlier, individual receptive fields do not correspond with cognitive categories or behaviours. Discriminates effectively means that the population of receptive fields that is detected in response to an object of one category is sufficiently similar to the populations activated in response to other objects of the same category, and sufficiently different from the populations activated in response to objects of any other category. Sufficiently means that it is possible to assign ranges of recommendation strengths to each receptive field in such a way that the predominant recommendation in response to any object is almost always appropriate. So another way of looking at this labelling is that receptive fields in the ≈visual objects area are particularly effective for recommending behaviours in response to visual objects.
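
One rough way to picture "discriminates effectively" is to compare how much the detected populations overlap within and between categories. The sketch below uses Jaccard overlap as a stand-in for "sufficiently similar". That is a simplifying assumption made for illustration: the definition above rests on whether suitable recommendation strengths can be assigned, not on any overlap measure. The field identifiers and category names are made up.

```python
def overlap(a, b):
    """Jaccard overlap between two sets of detected receptive fields."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def discriminates(populations_by_category):
    """True if every within-category overlap exceeds every between-category overlap."""
    items = [(cat, pop)
             for cat, pops in populations_by_category.items()
             for pop in pops]
    within, between = [], []
    for i, (cat_i, pop_i) in enumerate(items):
        for cat_j, pop_j in items[i + 1:]:
            bucket = within if cat_i == cat_j else between
            bucket.append(overlap(pop_i, pop_j))
    return bool(within) and bool(between) and min(within) > max(between)


# Hypothetical populations detected on two sightings of each category.
sightings = {
    "dog": [{1, 2, 3, 4}, {2, 3, 4, 5}],
    "cat": [{6, 7, 8, 9}, {7, 8, 9, 1}],
}
area_is_effective = discriminates(sightings)
print(area_is_effective)
```

In this toy data no field is detected on every sighting of a category, and one field (`1`) is shared across categories, yet the populations as a whole still separate the two categories.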

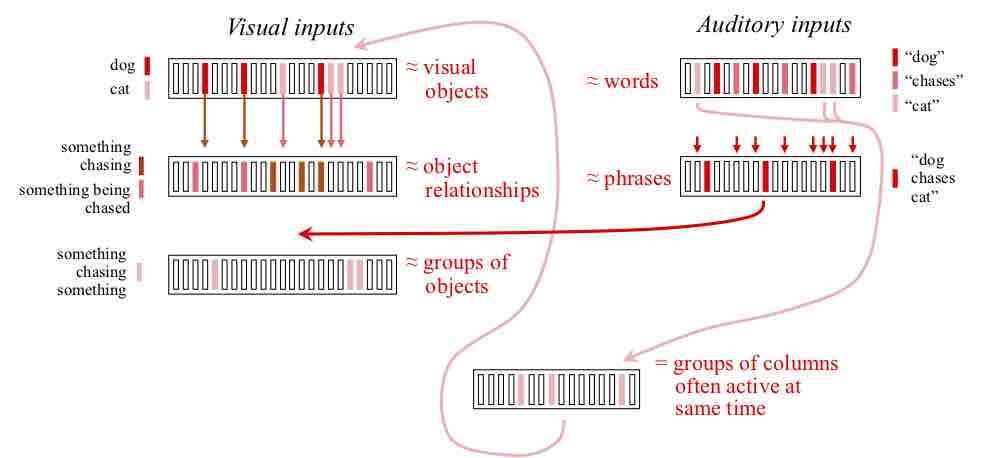

Different cortical areas define and detect receptive fields that are most effective for discriminating between different types of cognitive situations. The key receptive fields for behavioural purposes are those of layer V pyramidal neurons across all the columns in the area. The primary role of columns is to generate the information needed by the hippocampus to manage changes to receptive fields. As a result, within a column the layer V pyramidal neurons have somewhat similar but not identical receptive fields, and therefore have somewhat similar but not identical behavioural implications. The discrimination roles of different areas are illustrated conceptually, and combine the roles of the dorsal and ventral paths in visual processing.

Other cortical areas might be effective for discriminating between different types of groups of objects (≈groups of objects), different types of groups of groups (≈groups of groups), or different complex situations (≈situations).

The auditory areas have a much more limited range of discrimination capability. Thus for example the detected auditory receptive fields can discriminate between different spoken words (≈words), phrases (≈phrases) or sentences (≈sentences), but although these discriminations contain information about the structure of the sound sequences, they contain little information about the actual meaning of the structures. However, these auditory receptive fields have the ability to indirectly activate visual receptive fields on the basis of temporally correlated past activity, and the indirectly activated fields carry much more meaning.

To understand how this works, the first step is to think about how direct visual experience is processed.

PROCESSING TO SUPPORT PERCEPTION

There is a series of steps in the way the brain processes information derived from the external visual environment in order to determine an appropriate externally directed behaviour. Each step is a release of information from the senses into a cortical area, between specific cortical areas, or out of the cortex to drive muscles. All such releases are behaviours that are recommended by cortical pyramidal neuron receptive field detections, selected by the basal ganglia, and implemented by the thalamus. A major externally directed behaviour is the end point of a long sequence of these more detailed behaviours.

Consider for example a situation in which two people are working on a fence, cooperating to manoeuvre a panel into position. Nearby, a person walking their dog has stopped to read a sign, and they have not noticed that their dog has escaped its lead and started chasing a cat. Suppose that one of the people working on the fence is positioned so that he can see the rest of the situation, and needs to determine an appropriate externally directed behaviour. One such behaviour could be to continue to work on the fence, avoiding the risk of dropping the panel. Another behaviour could be to call out to warn the dog owner. A third behaviour could be to prepare for the cat to jump onto the fence nearby. Yet other behaviours could be to warn the partner about the approaching cat and dog, or adjust the manipulation of the panel to prepare for the cat’s arrival.

There are eight visual objects that could be considered. These objects are the fence, the fence panel, the other person working on the fence, the dog, the cat, the sign, the person looking at the sign, and the lead for the dog. There are a number of different groups of these objects that are behaviourally relevant: the fence plus the panel plus the other person working on it; the sign plus the person reading it; the dog plus the lead plus the person holding the lead; the dog plus the cat; the cat plus the fence if the cat is heading that way; or self plus the other person working on the fence plus the dog if the dog looks dangerous.

In very simple terms, brain processing begins with sensory information derived from individual objects being released in succession into ≈visual objects cortical areas. The activity of different populations of receptive fields detected in response to several different objects is prolonged briefly. These different populations are maintained active at different phases of frequency modulation to avoid confusion between them. Next the outputs from those populations are brought into the same modulation phase (or synchronized), which effectively releases them to ≈groups of objects cortical areas. The activity of different populations of receptive fields detected in these areas in response to several different groups of objects is prolonged at different modulation phases, then the outputs from those populations are released to ≈groups of groups cortical areas, and so on. The result is the activation of multiple populations of receptive field detections. In these populations, each receptive field has a range of behavioural recommendations, and the external behaviour with the predominant weight across all these receptive fields is implemented.

Overall, this process means that attention is moved across all the objects, then information derived from the different objects is combined. At a more detailed level, to combine information derived from different objects in the same group, attention must be placed on the objects in the group in quick succession. The receptive fields detected within one object have recommendation strengths in favour of processing steps like prolonging the activity of various currently active populations, paying attention next to a specific type of object, and releasing the outputs of the currently active ≈visual objects populations to the ≈groups of objects area and so on.

Hence the brain processing needed to develop a response to the situation is a long sequence of attention behaviours (i.e. release of selected information derived from the senses), activity prolongation behaviours, and information release behaviours. All these behaviours must occur in the correct sequence to achieve a pattern of receptive field detections with the appropriate recommendation strengths in favour of externally directed behaviours. For example, in the situation we are thinking about, combining information from the fence panel, the lead for the dog and the sign will not lead to a ≈groups of objects population with useful recommendation strengths in the current situation. The receptive fields currently detected in response to objects, groups of objects, groups of groups etc. will all have recommendation strengths in favour of various attention, prolongation and release behaviours, and the behaviours with the predominant recommendation strengths will be selected and implemented.

In practice, processing of visual experience through the cortical areas involved is much more complex than this very simplified description. Different areas are able to discriminate between more subtle distinctions than just objects and groups of objects. The more subtle distinctions are needed to provide inputs to areas able to discriminate between complex situations with different behavioural implications.

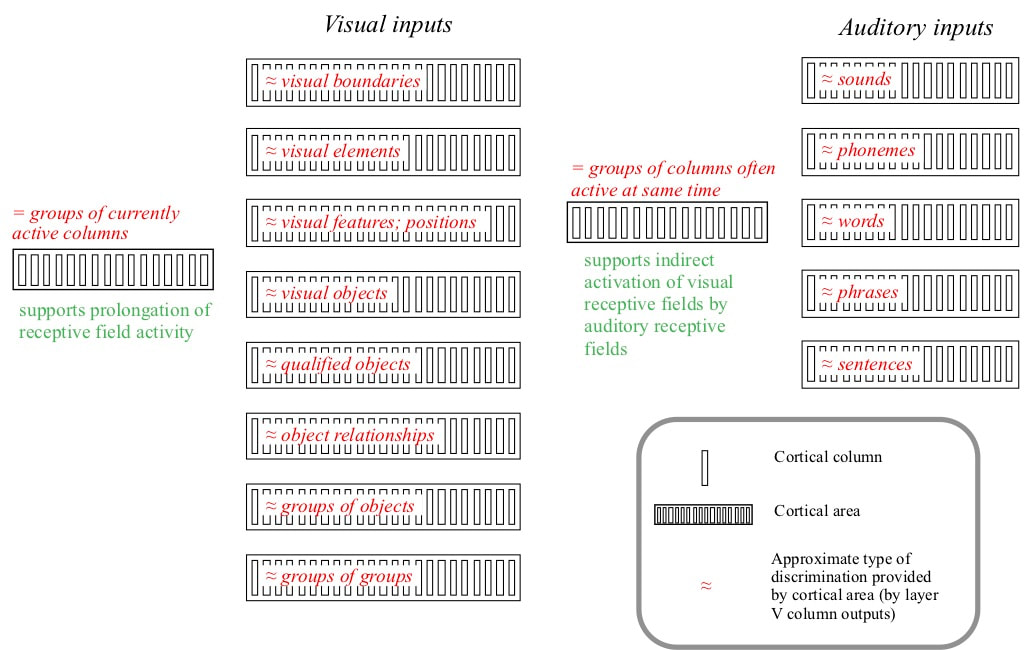

For example, consider how the visual experience of seeing a dog chasing a cat is processed. Attention is first focussed on the dog, and receptive fields on various levels of complexity are detected. Receptive fields in a ≈visual objects cortical area are able to discriminate between dogs and other types of objects. Receptive fields in an ≈object relationships area are able to discriminate between, for example, an object that is chasing something and an object that is being chased. The activity of the population of detections at these two levels is prolonged, and attention is focussed on the cat. Again, populations of receptive fields at the ≈visual objects and ≈object relationships levels of complexity are generated. The outputs from all the populations are then released to an area able to discriminate between different types of groups of objects, driving activation of a population that has predominant recommendation strengths appropriate in response to seeing a dog chase a cat.

ACCESSING VISUAL RECEPTIVE FIELD RECOMMENDATIONS VIA SPEECH

Speech is a well-developed mechanism for using the small but very specific information space provided by auditory inputs to access the very wide range of behavioural recommendations available with visual receptive fields.

When an object is seen, some population of receptive fields is detected in multiple cortical areas. For any object, the detected population will generally be different from the population detected in response to any other object. However, for objects of the same category, although no receptive field will be detected on every occasion, visual similarities mean that some receptive fields in the ≈visual objects area will be detected more often than others. Hence it is possible to identify a set of visual receptive fields that are detected relatively often when an object of the category is seen. There will also be similarities between the sets of receptive fields in some auditory area activated when the same word is heard on different occasions. This auditory area could be labelled ≈words. In fact, because of the smaller auditory input information space, the ≈words populations detected in response to the same word heard on different occasions will generally be more similar than in the case of visual objects. Hence it is possible to identify a set of receptive fields that are detected relatively often when the same word is heard.

Words are learned by often hearing a word like “dog” at the same time as seeing an actual dog or picture of a dog. Over all these occasions, there is a set of ≈words receptive fields often active at the same time as a set of ≈visual objects receptive fields. Frequent simultaneous activity of a group of auditory neurons and a group of visual neurons means that in the future the activity of the group of auditory neurons can indirectly activate the visual group. Hearing the word therefore generates a pseudovisual experience as if a kind of weighted average of many past experiences of seeing a dog was being experienced. This activates all the recommendation strengths associated with the visual receptive fields, including for example those in favour of verbal descriptions of a dog.
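
The word-learning idea above (frequent simultaneous activity allowing later indirect activation) can be illustrated with a simple co-activity counter. This is a minimal sketch under stated assumptions: the field labels such as `w_dog_1` and `v_fur`, the three learning episodes and the threshold value are all hypothetical, and counting co-occurrences is just one simple way to stand in for "often active at the same time".

```python
from collections import Counter


def learn_associations(episodes):
    """Count how often each (auditory field, visual field) pair is active together."""
    co_activity = Counter()
    for auditory_fields, visual_fields in episodes:
        for a in auditory_fields:
            for v in visual_fields:
                co_activity[(a, v)] += 1
    return co_activity


def indirectly_activated(auditory_fields, co_activity, threshold):
    """Visual fields whose summed co-activity with the heard fields reaches threshold."""
    support = Counter()
    for (a, v), count in co_activity.items():
        if a in auditory_fields:
            support[v] += count
    return {v for v, total in support.items() if total >= threshold}


# Each episode: (auditory fields detected on hearing "dog", visual fields detected on seeing a dog).
episodes = [
    ({"w_dog_1", "w_dog_2"}, {"v_fur", "v_four_legs", "v_tail"}),
    ({"w_dog_1"}, {"v_fur", "v_four_legs"}),
    ({"w_dog_1", "w_dog_2"}, {"v_four_legs", "v_tail", "v_collar"}),
]
co_activity = learn_associations(episodes)

# Hearing the word alone later on: which visual fields become indirectly active?
pseudo_visual = indirectly_activated({"w_dog_1", "w_dog_2"}, co_activity, threshold=3)
print(sorted(pseudo_visual))
```

The rarely seen `v_collar` field falls below the threshold, so the indirectly activated set behaves like a weighted average of past sightings rather than a replay of any single one.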

When a word like “chase” is heard, visual fields often active in the past at the same time as the auditory fields will be activated. However, the past simultaneous activity will be accumulated across scenes in which many different objects chased many other different objects. In this case the ≈words receptive fields detected in response to hearing “chase” will not be often active at the same time as any ≈visual objects fields. However, these fields will often be active at the same time as some receptive fields in the ≈object relationships and ≈groups of objects areas. Hearing the word will therefore generate a pseudovisual experience as if something vague was chasing, something vague was being chased, and something vague was chasing something vague.

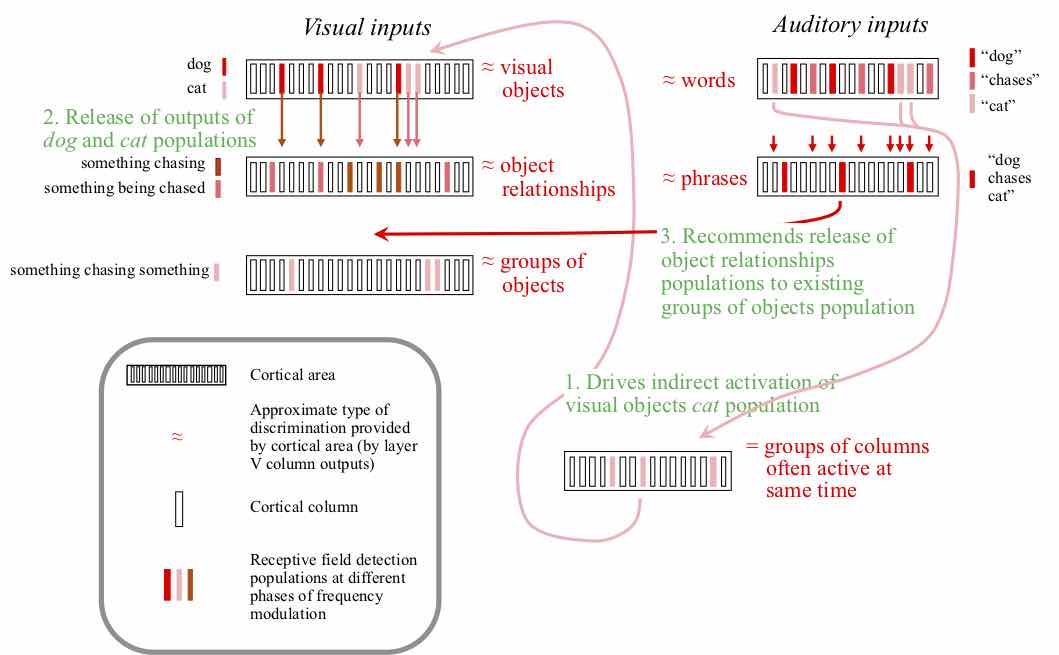

Three steps in processing that occur just after finishing hearing the spoken phrase “dog chases cat”, illustrated using an extremely simplified view of cortical areas. Several populations of receptive fields were already active, including a dog population in ≈visual objects, two chases populations in ≈object relationships and a third chases population in ≈groups of objects. The population of receptive fields in ≈words directly detected by hearing the word “cat” drives indirect activation of a second population of visual receptive fields in ≈visual objects at a different modulation phase from the dog population. Then the outputs from the dog population are synchronized with the existing something chasing population in ≈object relationships, and the outputs from the cat population are synchronized with the existing something being chased population. This drives the generation of two enhanced populations corresponding with dog chasing and cat being chased. Finally, outputs from the three populations in ≈words are synchronized, driving activity in the ≈phrases area. The population in the ≈phrases area has a predominant recommendation strength in favour of synchronizing the outputs from the two populations in ≈object relationships with the existing something chasing something population in ≈groups of objects. These outputs drive an enhanced population in the ≈groups of objects area similar to the one that would be generated by actually seeing a dog chasing a cat.

Consider now how hearing the sentence “dog chases cat” can be processed. As in the case of visual perception, an appropriate sequence of behaviours is required. The first step following hearing the word “dog” is indirect activation of ≈visual objects receptive fields often active when looking at a dog. The activity of these receptive fields is prolonged. The next step, following hearing the word “chases”, is indirect activation of two populations of receptive fields in the ≈object relationships area (separated at different phases of frequency modulation) and one in the ≈groups of objects area as described in the previous paragraph. The activity of the three populations is also prolonged. The next steps, following hearing the word “cat”, are indirect activation and activity prolongation of ≈visual objects receptive fields often active when looking at a cat.

At this point there are five prolonged populations of visual receptive fields: populations corresponding with dog and cat in the ≈visual objects area; populations corresponding with something chasing and something being chased in the ≈object relationships area; and a population corresponding with something chasing something in the ≈groups of objects area.

The critical next steps are firstly that the outputs from the dog population must be brought into the same phase of frequency modulation as the something chasing population, and the outputs from the cat population must be brought into the same phase of frequency modulation as the something being chased population. The outputs from the two ≈visual objects populations then drive enhanced populations in the ≈object relationships area corresponding with dog chasing and cat being chased. These two populations are at different phases of modulation, but the next step is to bring the outputs from the two populations into the same phase as the already existing something chasing something population in the ≈groups of objects area. This results in an enhanced population in the ≈groups of objects area similar to one that would be activated by actually seeing a dog chasing a cat.

All these steps must be recommended at the right time by receptive field detections. For example, auditory receptive fields at the ≈phrases level will be detected once the complete phrase has been spoken. These receptive fields will contain information about the structure but not the content of the phrase, and must acquire recommendation strengths in the basal ganglia in favour of the releases from the two populations in the ≈object relationships area to the ≈groups of objects area.

A key point is that the prolongation and modulation behaviours must be done in the correct order and applied to the appropriate populations. For example, the dog population must be synchronized with the something chasing population, not the something being chased population. If different synchronizations were performed, the result could be a pseudovisual experience as if a cat were chasing a dog, or a cat and dog were both chasing something etc. The orders must be learned, and can differ between different languages.
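
The effect of choosing the right or wrong synchronization can be shown with a toy binding function. This is a sketch only: representing a population as a tuple of area, content label and integer phase slot is an assumption made for illustration, as are the `synchronize` and `describe` helper names.

```python
from collections import namedtuple

# A population is reduced to an area name, a content label and a phase slot.
Population = namedtuple("Population", ["area", "content", "phase"])


def synchronize(source, target):
    """Bring the source outputs into the target's phase, enhancing the target population."""
    return Population(target.area, f"{source.content} {target.content}", target.phase)


def describe(populations):
    """Readable summary of a set of enhanced populations."""
    return " / ".join(p.content for p in populations)


# Prolonged populations, each held at its own modulation phase.
dog = Population("visual objects", "dog", phase=0)
cat = Population("visual objects", "cat", phase=1)
chasing = Population("object relationships", "chasing", phase=2)
being_chased = Population("object relationships", "being chased", phase=3)

# Learned binding for "dog chases cat": first noun to the chasing role.
correct = [synchronize(dog, chasing), synchronize(cat, being_chased)]
# The opposite synchronization produces the opposite pseudovisual experience.
swapped = [synchronize(cat, chasing), synchronize(dog, being_chased)]

print(describe(correct))
print(describe(swapped))
```

The same four prolonged populations are present in both cases. Only the choice of which outputs are brought into which phase differs, which is why that choice has to be learned.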

Thus the grammatical order of words reflects the learned sequence in which prolongation and release behaviours are performed. In a simple phrase describing a dog chasing a cat, the dog is the subject (S), the cat is the object (O) and chase is the verb (V). Across languages, six different orders are possible: SVO, SOV, VSO, VOS, OVS and OSV.

Possible word orders to communicate that a dog is chasing a cat:

SOV dog cat chases

SVO dog chases cat

VSO chases dog cat

VOS chases cat dog

OVS cat chases dog

OSV cat dog chases
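
The six orders listed above are simply the permutations of the three roles. As a minimal sketch, they can be enumerated as follows; the names `WORDS` and `word_orders` are illustrative only.

```python
from itertools import permutations

# Words filling each grammatical role in the example phrase.
WORDS = {"S": "dog", "V": "chases", "O": "cat"}


def word_orders():
    """Map each order label (e.g. "SVO") to the corresponding word sequence."""
    return {
        "".join(order): " ".join(WORDS[role] for role in order)
        for order in permutations("SVO")
    }


orders = word_orders()
for label, phrase in orders.items():
    print(label, phrase)
```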

These word orders reflect different orders in which sequences of indirect activation, prolongation and release behaviours are performed. The vast majority of human languages use either SVO or SOV. A relatively small proportion use VSO, and use of the other three orders is rare. Although any order could be learned, some activation sequences can probably be managed more effectively than others.

One final note is that determination of the next activation behaviour by the basal ganglia takes a certain amount of time. This time can be reduced, and processing sped up, by recording complete sequences in the cerebellum. The basal ganglia then select the sequence as a whole, and the cerebellum drives the sequence to completion with no further reference to the basal ganglia.
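
The saving can be pictured by counting selections. The following is a toy sketch, not a model of the actual circuitry: the step names are illustrative, and `run_stepwise` and `run_recorded` are hypothetical helpers that only count how many separate selections are needed.

```python
# Illustrative sequence of behaviours for the dog-chasing-cat phrase (assumption).
STEPS = [
    "synchronize dog with something chasing",
    "synchronize cat with something being chased",
    "release to groups of objects",
]


def run_stepwise(steps):
    """Every behaviour is selected individually before it is performed."""
    selections = 0
    performed = []
    for step in steps:
        selections += 1  # one separate selection per behaviour
        performed.append(step)
    return performed, selections


def run_recorded(steps):
    """The sequence is selected once as a whole, then driven to completion."""
    selections = 1  # a single selection of the complete recorded sequence
    performed = list(steps)  # no further selection between steps
    return performed, selections


stepwise_result, stepwise_selections = run_stepwise(STEPS)
recorded_result, recorded_selections = run_recorded(STEPS)
```

Both routes perform the same behaviours in the same order; in this sketch the recorded route needs one selection where the stepwise route needs one per behaviour.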
